TrueFoundry AI Gateway

Connect, observe & control LLMs, MCPs, Guardrails & Prompts

2.1K followers


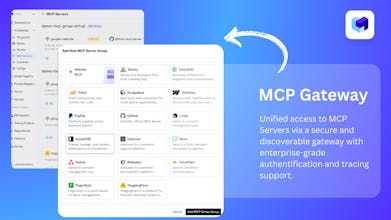

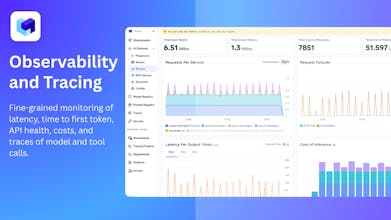

TrueFoundry’s AI Gateway is the production-ready control plane to experiment with, monitor and govern your agents. Experiment by connecting all agent components together (Models, MCP, Guardrails, Prompts & Agents) in the playground. Maintain complete visibility over responses with traces and health metrics. Govern by setting rules/limits on request volumes, cost, response content (Guardrails) and more. It is already used in production for 1000s of agents by multiple F100 companies!

Interactive

Free Options


Looks solid. What would be the inherent advantage, though, over setting up something like LiteLLM, which I believe is open source?

TrueFoundry AI Gateway

@vysakh_t Great question! LiteLLM is awesome for getting started, but teams quickly outgrow it as they scale.

What we focus on is everything needed for production-grade, enterprise AI:

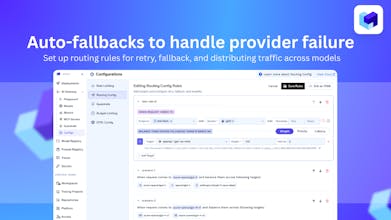

Multi-region + low-latency routing and data residency guarantees

Deep observability — per-tool/per-model traces, guardrail logs, cost metrics, MCP-level telemetry

Enterprise-grade auth (OAuth2, DCR, RBAC) and secure MCP server management

Reliability features like automatic failover, fallback routing, and rate-limiting by model/team/user

Support + SLAs that large enterprises require when AI becomes mission-critical
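The failover, fallback routing and per-team rate-limiting items above can be pictured with a minimal sketch. This is not TrueFoundry's implementation or API: the names `SlidingWindowLimiter`, `route_with_fallback`, `primary-model` and `backup-model` are hypothetical, and the limiter assumes a simple sliding window keyed by (team, model). A request tries models in priority order and moves to the next one on either a provider error or an exhausted budget.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, List, Tuple


class RateLimitExceeded(Exception):
    """Raised when a (team, model) pair has used up its request budget."""


class SlidingWindowLimiter:
    """Hypothetical limiter: at most `limit` requests per `window_s` seconds per (team, model)."""

    def __init__(self, limit: int, window_s: float) -> None:
        self.limit = limit
        self.window_s = window_s
        self._hits: Dict[Tuple[str, str], Deque[float]] = defaultdict(deque)

    def check(self, key: Tuple[str, str], now: float) -> None:
        hits = self._hits[key]
        # Forget requests that have slid out of the time window.
        while hits and now - hits[0] >= self.window_s:
            hits.popleft()
        if len(hits) >= self.limit:
            raise RateLimitExceeded(f"{key} hit its limit of {self.limit}")
        hits.append(now)


def route_with_fallback(
    prompt: str,
    team: str,
    providers: List[Tuple[str, Callable[[str], str]]],
    limiter: SlidingWindowLimiter,
    now: float,
) -> Tuple[str, str]:
    """Try models in priority order; return (model, response) from the first that succeeds."""
    errors: List[str] = []
    for model, call in providers:
        try:
            limiter.check((team, model), now)
            return model, call(prompt)
        except Exception as exc:  # rate limit or provider error: fall back to the next model
            errors.append(f"{model}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Usage: the primary model times out, so the request fails over to the backup.
def primary(prompt: str) -> str:
    raise TimeoutError("primary timed out")


def backup(prompt: str) -> str:
    return "echo: " + prompt


limiter = SlidingWindowLimiter(limit=100, window_s=60.0)
print(route_with_fallback("hi", "team-a", [("primary-model", primary), ("backup-model", backup)],
                          limiter, now=time.monotonic()))
```

Keying the budget by (team, model) means one team exhausting its quota on a model pushes only that team's traffic to the fallback, leaving other teams unaffected.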

LiteLLM is great for experimentation — TrueFoundry is built for operating AI at scale across many teams, regions, tools, and compliance environments.

TrueFoundry AI Gateway

Hello Product Hunt community, Nikunj here, co-founder & CEO.

Today's launch is super close to my heart, not just as a product but as a package of learnings we have been fortunate to accumulate working with enterprises: those that have been building at the cutting edge and have shown the courage to reverse decisions they previously believed to be correct. This is best illustrated by a real customer conversation, with timelines.

June 2024: Customer (building a scaled use case): "We will never use a Gateway. It lies on the critical path of the request and is such a thin layer that we will own this part ourselves. Model inferencing and GPU management are a different story."

Sep 2024: Customer (As Sonnet and o1 were being launched): "These APIs keep changing, models keep getting outdated. The Gateway is becoming a pain to maintain."

Nov 2024: Customer (As other teams started to ask for inferencing): "It's one thing to support one use case through the Gateway, but as we become a platform, we now need a much stronger visibility and control layer."

Feb 2025: Customer (As the gateway went down and they started losing $6k / second): !

May 2025: Customer (As MCPs started becoming popular): "It's becoming impossible to keep up with the market, scale the Gateway reliably, and add the right controls to satisfy varying requirements from different teams. @nikunj_bajaj are you all continuing to build the Gateway?"

May 2025: Nikunj: yes, we are.

June 2025: Customer: Started migrating prod traffic to TrueFoundry AI Gateway.

July 2025: Customer: Observability and governance controls became a critical part of their workflow.

Oct 2025: Customer: First MCP-driven application launched to prod.

Nov 2025: Customer (over dinner): I remember having a conversation with you about 1.5 years back, thinking we would run our own Gateway. Glad we shifted :)

We have learnt so much along the way: how to get the design of our AI Gateway right, access controls, authentication/authorization, making the MCP Gateway compatible with existing enterprise stacks, meeting very difficult data residency requirements, why certain guardrails don't "just work", and more.

And this launch marks the joint success of our collaboration with many other early adopters of TrueFoundry who have helped us build and shape our AI Gateway. Cheers to our customers.

Ztalk.ai

Finally, an AI Gateway that brings order to the LLM chaos! Love the unified API access and critical cost controls like semantic caching. Congrats @agutgutia and TrueFoundry team!

TrueFoundry AI Gateway

@kshitijdixit9 Really appreciate it! A lot of teams are using the Gateway for cost visibility, deep observability and monitoring, and consolidated billing - all the things that get painful once you scale LLM usage. Glad the value resonates!

Okara

Congratulations on the launch, Anuraag!

TrueFoundry AI Gateway

@fatima_rizwan Thank you for your support!

This is one of the strongest interpretations of the MCP standard I’ve seen yet.

While most implementations simply expose tools, you’ve built a managed layer that agents can reliably operate through. With secure tool access, permissioning, and consistent interfaces, this has the potential to become a solid backbone for enterprise-scale agent ecosystems. Really looking forward to seeing where the roadmap goes from here!

TrueFoundry AI Gateway

@nitesh_shakya

You’ve captured exactly what we’re aiming for. Most MCP integrations today stop at exposing tools, but real enterprise agent systems need a governed, reliable, and observable execution layer. That’s why we’re investing heavily in:

Secure tool access + permissioning, so agents only invoke the capabilities they’re allowed to.

Enforcing guardrails, logs, metrics, and auditability by default, which becomes critical once multiple agents start chaining actions.

A shared backbone for agent-to-agent communication (A2A) - something we’re excited to expand on in the roadmap.
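The permissioning and audit points above can be illustrated with a minimal deny-by-default sketch. It is not TrueFoundry's MCP Gateway API: `ToolGateway`, `ToolNotPermitted` and the tool names `search_docs` and `delete_record` are hypothetical, and it assumes permissions are a simple per-agent allowlist of tool names. Every attempt, allowed or blocked, is appended to an audit log before the tool runs.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Set


class ToolNotPermitted(Exception):
    """Raised when an agent calls a tool outside its allowlist."""


@dataclass
class ToolGateway:
    """Hypothetical gateway that checks an allowlist before dispatching a tool call."""
    tools: Dict[str, Callable[..., Any]]
    permissions: Dict[str, Set[str]]  # agent id -> names of tools it may call
    audit_log: List[dict] = field(default_factory=list)

    def invoke(self, agent: str, tool: str, **kwargs: Any) -> Any:
        # Unknown agents get an empty allowlist, so access is denied by default.
        allowed = tool in self.permissions.get(agent, set())
        # Record every attempt so chained agent actions remain auditable.
        self.audit_log.append({"agent": agent, "tool": tool, "allowed": allowed})
        if not allowed:
            raise ToolNotPermitted(f"{agent} may not call {tool}")
        return self.tools[tool](**kwargs)


# Usage: a support agent may search docs but not delete records.
gw = ToolGateway(
    tools={
        "search_docs": lambda query: f"results for {query}",
        "delete_record": lambda record_id: f"deleted {record_id}",
    },
    permissions={"support-agent": {"search_docs"}},
)
print(gw.invoke("support-agent", "search_docs", query="refund policy"))
try:
    gw.invoke("support-agent", "delete_record", record_id="42")
except ToolNotPermitted as exc:
    print("blocked:", exc)
```

Logging before the permission decision is enforced means denied calls show up in the audit trail too, which is usually what you want when tracing a misbehaving agent.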

As teams move toward production-grade agent ecosystems, having this stable foundation becomes the difference between a cool demo and a system you can actually trust in operations.

We’re thrilled to keep pushing the roadmap forward - especially around agent registry, A2A protocol, semantic caching, and guardrails for MCP servers. Really appreciate the thoughtful note 🙏 Sharing our public roadmap here as well - https://www.truefoundry.com/roadmap

Congrats on the launch. Having a unified OpenAI-compatible endpoint across all LLM providers is a game changer for devs!

TrueFoundry AI Gateway

@dheerajmundhra Thanks for the support! Do try it out here https://www.truefoundry.com/ai-gateway and share feedback!

Really cool work! Excited to see its growth!! What is the most common Guardrail rule F100 companies use to prevent unexpected agent recursion or tool-call looping?

TrueFoundry AI Gateway

@swecha_sanjay07 thanks! The most common pattern we see to prevent recursion or tool-call loops is simply setting limits on how many times an agent is allowed to invoke tools within a single run.

Most teams start with straightforward guardrails like:

max tool-call depth (e.g., don’t allow a tool to trigger another tool more than N levels deep)

max tool-call count per request (stop execution once a threshold is hit)

These guardrails catch almost all accidental loops without needing anything more complex.
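The two limits described above (max tool-call depth and max tool-call count per request) fit in a small per-run counter. This is a minimal sketch, not TrueFoundry's guardrail configuration: `ToolCallGuard`, `ToolCallLimitExceeded` and `run_tool` are hypothetical names, and it assumes depth 1 means a tool called directly by the agent, depth 2 a tool triggered by that tool, and so on.

```python
from dataclasses import dataclass


class ToolCallLimitExceeded(Exception):
    """Raised when an agent run breaches a tool-call guardrail."""


@dataclass
class ToolCallGuard:
    """Hypothetical per-request guard; create one per agent run."""
    max_depth: int = 3   # deepest allowed chain of tool-triggers-tool
    max_calls: int = 20  # total tool calls allowed in one request
    calls: int = 0

    def check(self, depth: int) -> None:
        """Call before every tool invocation; depth 1 = called directly by the agent."""
        if depth > self.max_depth:
            raise ToolCallLimitExceeded(f"depth {depth} exceeds max_depth={self.max_depth}")
        if self.calls >= self.max_calls:
            raise ToolCallLimitExceeded(f"max_calls={self.max_calls} reached")
        self.calls += 1


def run_tool(guard: ToolCallGuard, depth: int) -> None:
    """A buggy tool that always triggers itself again (an accidental loop)."""
    guard.check(depth)
    run_tool(guard, depth + 1)


guard = ToolCallGuard(max_depth=3, max_calls=20)
try:
    run_tool(guard, depth=1)
except ToolCallLimitExceeded as exc:
    print(f"stopped after {guard.calls} calls: {exc}")  # stopped after 3 calls: depth 4 exceeds max_depth=3
```

The depth check stops recursive chains early, while the count check catches flat loops where the agent keeps calling tools at the same level.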