TrueFoundry AI Gateway

Connect, observe & control LLMs, MCPs, Guardrails & Prompts

2.1K followers

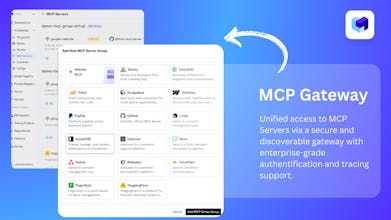

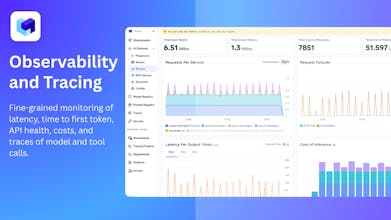

TrueFoundry’s AI Gateway is a production-ready control plane to experiment with, monitor, and govern your agents. Experiment by connecting all agent components (Models, MCP, Guardrails, Prompts & Agents) in the playground. Maintain complete visibility over responses with traces and health metrics. Govern by setting rules and limits on request volumes, cost, response content (Guardrails), and more. It is used in production for thousands of agents by multiple Fortune 100 companies!

Really cool! Quick question — how does fallback actually work under the hood? Does the gateway retry with a secondary model automatically?

TrueFoundry AI Gateway

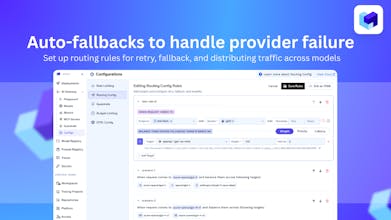

@bhavesh_patel1 Fallback requires a routing config to be enabled. Once it is, if a model fails with a non-retriable error (401, 403, 5xx), the gateway automatically falls back to the configured fallback models. If errors spike on a model, we mark that model unhealthy and put it in "cooldown" mode for 5 minutes.
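
To make the reply above concrete, here is a minimal sketch of fallback with a cooldown, written in Python. It is not the gateway's actual implementation: `FallbackRouter`, `ModelError`, and the `error_threshold` parameter are hypothetical names, and "a spike in errors" is approximated as a run of consecutive failures. Only the status codes (401, 403, 5xx) and the 5-minute cooldown come from the reply.

```python
import time

COOLDOWN_SECONDS = 5 * 60        # from the reply: unhealthy models cool down for 5 minutes
FALLBACK_STATUSES = {401, 403}   # from the reply; any 5xx also triggers fallback


class ModelError(Exception):
    """Hypothetical error type carrying the HTTP status of a failed model call."""

    def __init__(self, status):
        super().__init__(f"model call failed with HTTP {status}")
        self.status = status


def should_fall_back(status):
    return status in FALLBACK_STATUSES or 500 <= status <= 599


class FallbackRouter:
    def __init__(self, models, error_threshold=3, clock=time.monotonic):
        self.models = list(models)              # priority order: primary first
        self.error_threshold = error_threshold  # assumption: consecutive errors = "spike"
        self.clock = clock
        self.error_counts = {m: 0 for m in self.models}
        self.cooldown_until = {}

    def is_healthy(self, model):
        until = self.cooldown_until.get(model)
        if until is None:
            return True
        if self.clock() >= until:
            # Cooldown expired: give the model a clean slate.
            del self.cooldown_until[model]
            self.error_counts[model] = 0
            return True
        return False

    def record_error(self, model):
        self.error_counts[model] += 1
        if self.error_counts[model] >= self.error_threshold:
            self.cooldown_until[model] = self.clock() + COOLDOWN_SECONDS

    def call(self, send, prompt):
        """Try each healthy model in order; `send(model, prompt)` does the real call."""
        last_error = None
        for model in self.models:
            if not self.is_healthy(model):
                continue
            try:
                result = send(model, prompt)
            except ModelError as err:
                if not should_fall_back(err.status):
                    raise
                self.record_error(model)
                last_error = err
                continue
            self.error_counts[model] = 0
            return result
        raise RuntimeError("no healthy model could serve the request") from last_error
```

The clock is injectable so the cooldown can be exercised in tests without waiting five real minutes.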

I have used OpenRouter, LiteLLM, Vercel AI Gateway, how is it better than those? Just trying to understand, specifically from a developer perspective.

TrueFoundry AI Gateway

@iamshnik OpenRouter lets you talk to different models without signing up with each provider. Vercel AI Gateway works similarly for models.

TrueFoundry and LiteLLM are AI gateways where users bring their own keys, and both can be self-hosted within the enterprise. We also provide complete observability by default, plus load-balancing and rate-limiting configs that can automatically switch your application over when a model provider is down. The gateway also adds a prompt management layer in which the gateway itself substitutes the prompt for you, keeping your client-side code very small. It also lets you talk to multiple MCP servers with a single token, so that as a developer you don't need to worry about implementing token management.
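
As a rough illustration of the prompt management idea, the sketch below shows server-side prompt substitution in Python: the client sends only a prompt identifier, a version, and variables, and the template text lives on the server. The registry layout, the `render_prompt` helper, and the payload field names are all hypothetical and are not TrueFoundry's API.

```python
from string import Template

# Hypothetical server-side registry: (prompt id, version) -> template text.
PROMPTS = {
    ("support-reply", 1): Template(
        "You are a support agent. Answer briefly: $question"
    ),
    ("support-reply", 2): Template(
        "You are a support agent for $product. Answer briefly: $question"
    ),
}


def render_prompt(prompt_id, version, variables):
    """Look up a stored template and fill in the client's variables."""
    return PROMPTS[(prompt_id, version)].substitute(variables)


# The client never carries the prompt text, only a reference and its variables.
client_payload = {
    "prompt_id": "support-reply",
    "version": 2,
    "variables": {"product": "Acme", "question": "How do I reset my password?"},
}

rendered = render_prompt(
    client_payload["prompt_id"],
    client_payload["version"],
    client_payload["variables"],
)
print(rendered)
```

Because the template is resolved on the server, a prompt can be revised by publishing a new version without redeploying the client.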

PROCESIO

congrats!!!

TrueFoundry AI Gateway

@madalina_barbu Thank you for your support!

Reindeer

Great work on the launch!

Curious…how seamless is it to integrate existing internal copilots or agents without modifying their current workflows?

TrueFoundry AI Gateway

@_muzammil_kt The gateway integrates seamlessly with any copilot or agent built on OpenAI-compatible endpoints, without requiring changes to your existing workflows.
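
A minimal sketch of what "OpenAI-compatible" means in practice, in Python: the request body stays the same and only the base URL and key change. The gateway URL, both keys, and the model name are placeholders, not real TrueFoundry values, and the sketch only builds the requests without sending them.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, user_message):
    """Build an OpenAI-style chat completion request (not sent here)."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Existing agent code talking to the provider directly.
direct = build_chat_request(
    "https://api.openai.com/v1", "sk-provider-key", "gpt-4o-mini", "Hello"
)

# Same agent routed through a gateway: only base URL and key differ (placeholders).
via_gateway = build_chat_request(
    "https://gateway.example.com/v1", "gateway-key", "gpt-4o-mini", "Hello"
)

print(via_gateway.full_url)
```

In an existing agent this usually comes down to changing two configuration values, which is why the workflow code itself does not need to change.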

Exciting stuff! Can you please share the roadmap?

TrueFoundry AI Gateway

@akshay_siroya1 We’ve published our public roadmap to give the community full visibility into what we’re building across the AI Gateway, MCP ecosystem, and Agent platform. Please find it here - https://www.truefoundry.com/roadmap. Our focus over the coming months is on an agent registry, OpenAPI-to-MCP conversion, and A2A protocols.

If you have ideas, feedback, or features you’d love to see, please drop them in - your input directly shapes what we prioritize next.

As an ML engineer who’s built and deployed multiple LLM systems in production — LangGraph agents, RAG pipelines, and fairly involved evaluation/observability layers — the hardest part has always been the infrastructure glue:

managing multiple model providers

keeping logs and tracing consistent

tracking costs

maintaining prompt versions without breaking apps

What I like about TrueFoundry’s AI Gateway is that it actually solves these operational headaches cleanly. The unified API, multi-provider routing, centralised logging, cost/latency tracking, RBAC, and prompt versioning remove a huge amount of engineering effort that teams usually end up duct-taping together. It’s the kind of layer you wish existed when you’re trying to scale LLM usage across different teams and use-cases.

Really excited to see this launch — it fills a very real gap in the modern AI stack.

TrueFoundry AI Gateway

@aditi_gupta35 Thank you so much - you articulated the pain better than we could. The goal of the Gateway was exactly this: remove the hidden ops burden around providers, MCPs, logs, costs, and prompt versions so teams can actually focus on building.

Really glad it resonates with someone who’s lived these problems firsthand!

Looks like a good platform for AI governance. One API key, one endpoint, multiple models, consistent logs + metrics. This saves so much mental overhead.

TrueFoundry AI Gateway

@raveena_venugopal That mental overhead you mentioned is exactly what kept coming up in our conversations with teams. The moment you go from “one model in dev” to “multiple models + environments + guardrails + logs,” everything suddenly feels scattered and hard to govern.

We wanted the Gateway to feel like turning chaos into one clean control panel:

One API key and endpoint - no more juggling provider SDKs

Unified logs + metrics - finally compare behaviour across models

Central governance - permissions, cost controls, guardrails all in one place