NexaSDK for Mobile

Easiest solution to deploy multimodal AI to mobile

643 followers

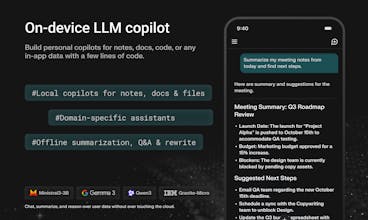

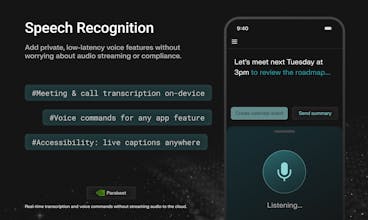

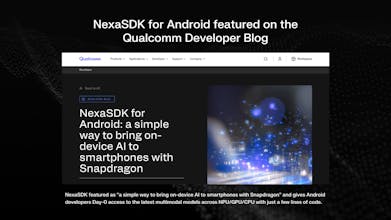

NexaSDK for Mobile lets developers run the latest multimodal AI models fully on-device in iOS and Android apps, with Apple Neural Engine and Snapdragon NPU acceleration. In just 3 lines of code, you can build chat, multimodal, search, and audio features with no cloud cost, complete privacy, 2× faster inference, and 9× better energy efficiency.

Congrats on the launch, Team!

This is very impressive!

I am currently building a mobile-first voice-to-app builder and I'm going to try NexaSDK immediately for voice mode speech recognition.

Quick question on the licensing/usage model: Can I use this SDK to embed it into the apps I generate for my users? Specifically, will I need a different NEXA_TOKEN for each user-generated mobile app, or does the SDK support a single token/license for a platform generating multiple apps?

NexaSDK for Mobile

@alexander_ostapenko2 Thanks Aleksandr for the support. NexaSDK is perfect for your use case. We support the best on-device ASR model, Parakeet v3, on Apple and Qualcomm NPUs. It can provide battery-efficient and fast inference for your task.

Let's book a call and I'll walk you through how licensing works: https://nexa.ai/book-a-call

@alanzhuly thank you, Alan! Yep, let's have a call!

Swytchcode

Amazing. Do you also manage version control and git commits?

NexaSDK for Mobile

@chilarai Thanks! Not directly: NexaSDK isn't a Git/version-control tool. We integrate into your existing workflow (GitHub/GitLab, CI/CD). What we handle is AI model inference. Please feel free to let me know if you have any other feedback or questions.

Swytchcode

@alanzhuly Sure, will do!

Congrats on the launch! Running models locally is always the better choice for privacy, but I'd still like to know more about the privacy and security you provide.

NexaSDK for Mobile

@anishsharma Thanks Anish. Yes, local AI is the perfect choice for privacy. NexaSDK runs 100% locally and offline, so none of your data leaves your device while AI models are running. Please feel free to let me know if you have any other feedback or questions.

What are the specific cases where on-device AI gives a real advantage over cloud models?

NexaSDK for Mobile

@lmadev Great question. On-device wins when you need (1) privacy by default (camera/mic/screenshots/health data), (2) offline or unreliable network (travel, field work), (3) real-time latency (live camera features, voice agents, AR), and (4) predictable cost at scale (no per-request cloud bill).

Examples: always-on voice commands that work in airplane mode, and local semantic search over personal files and messages with data never leaving the phone. Cloud still makes sense for the heaviest reasoning, so many apps end up hybrid.
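The criteria in this reply can be summarized as a routing policy for a hybrid app. The sketch is hypothetical: the `Request` fields, the 200 ms "real-time" threshold, and `choose_backend` are illustrative assumptions, not part of NexaSDK, and the order of the checks is just one reasonable precedence (privacy and offline use override the cloud route for heavy reasoning).

```python
from dataclasses import dataclass

# Hypothetical request descriptor; these fields are illustrative, not a NexaSDK API.
@dataclass
class Request:
    handles_sensitive_data: bool  # camera, mic, screenshots, health data
    network_available: bool       # False in airplane mode or during field work
    latency_budget_ms: int        # e.g. a live camera frame or a voice-agent turn
    needs_heavy_reasoning: bool   # tasks too large for an on-device model

# Hypothetical cutoff below which a network round trip is treated as too slow.
REALTIME_BUDGET_MS = 200

def choose_backend(req: Request) -> str:
    """Return "on_device" or "cloud" using the four criteria from the reply."""
    # (1) Privacy by default: sensitive inputs never leave the phone.
    if req.handles_sensitive_data:
        return "on_device"
    # (2) Offline or unreliable network: the cloud is not an option.
    if not req.network_available:
        return "on_device"
    # (3) Real-time latency: skip the network round trip.
    if req.latency_budget_ms <= REALTIME_BUDGET_MS:
        return "on_device"
    # The heaviest reasoning can still go to the cloud in a hybrid app.
    if req.needs_heavy_reasoning:
        return "cloud"
    # (4) Otherwise stay local for predictable cost (no per-request cloud bill).
    return "on_device"

print(choose_backend(Request(False, True, 2000, True)))  # cloud
print(choose_backend(Request(True, True, 2000, True)))   # on_device
```

Checking the cheap, hard constraints first keeps the cloud path as the exception rather than the default.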

Please feel free to let me know if you have any other feedback or questions.

TarotRead AI

Really exciting work on bringing on-device AI to mobile. Love the focus on performance and privacy.

How smooth is the integration with existing iOS/Android apps? Any recommended examples or best practices to help developers get started quickly? I might actually use it in my new app :)

NexaSDK for Mobile

@nilni Thanks Nil for the support. With just 3 lines of code, you can integrate it into your apps. Check out our quickstart guides:

Android: https://docs.nexa.ai/nexa-sdk-android/overview

iOS: https://docs.nexa.ai/nexa-sdk-ios/overview

Excellent solution for on-device AI! The focus on privacy and energy efficiency is critical for mobile adoption. Love the practical approach with 3 lines of code and direct integration with iOS/Android. This unlocks so many possibilities for enterprise mobile apps.

NexaSDK for Mobile

@imraju Thanks Raju for your support. Please feel free to let me know if you have questions or feedback while trying out NexaSDK.

MeDo by Baidu

I'm gonna try this with my new iPhone :D

How does NexaSDK handle memory constraints, power efficiency, and thermal limits on mobile devices?

NexaSDK for Mobile

@cheng_ju1 Awesome! Please let us know your feedback. NexaSDK optimizes models so they fit within the memory constraints of a mobile device. It can also run models on the NPU, which is 9× more energy efficient than other SDKs that use only the CPU for model inference.
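The reply does not say which optimizations NexaSDK applies to fit models into memory. One widely used technique is weight quantization, i.e. storing each weight in fewer bits. As a rough illustration, assuming a hypothetical 1-billion-parameter model and counting the weights only, the footprint scales directly with bits per weight:

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes).

    Ignores activations, KV cache, and runtime overhead, so real usage is higher.
    """
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9

# Hypothetical 1-billion-parameter model at common precisions.
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {weight_memory_gb(1e9, bits):.1f} GB")
# 32-bit: 4.0 GB
# 16-bit: 2.0 GB
#  8-bit: 1.0 GB
#  4-bit: 0.5 GB
```

Going from 16-bit to 4-bit weights cuts the weight footprint by 4×, which is often the difference between a model that fits in a phone's available RAM and one that does not.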