NexaSDK for Mobile

Easiest solution to deploy multimodal AI to mobile

643 followers

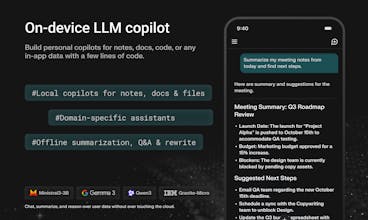

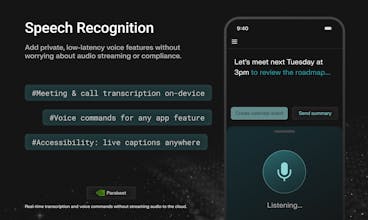

NexaSDK for Mobile lets developers run the latest multimodal AI models fully on-device in iOS and Android apps, with Apple Neural Engine and Snapdragon NPU acceleration. In just 3 lines of code, build chat, multimodal, search, and audio features with no cloud cost, complete privacy, 2× faster speed, and 9× better energy efficiency.

Any plans for on-device guardrails / safety filters? (Especially for multimodal inputs)

Hi, I'm curious—what kinds of AI models does NexaSDK actually support out of the box? Are we talking language models, vision, speech, or all of them? And when it comes to running these models on device, is there a minimum RAM requirement you recommend for smooth performance? Would love to know before diving in—thanks!

Hi, so this is basically a tool that has libraries that make AI integrations easier? Could u pls tell me what this does in 1 sentence?

Great project. Can you process video streams directly, or do you break the video into frames and run recognition on each image?

Wow, NexaSDK for Mobile looks incredible! The on-device AI with no cloud costs is a total game changer. Super curious, how does it handle model updates & maintenance in offline environments?

Really interesting approach to run AI fully on-device. This could be a big win for apps that care about user privacy and offline performance. Is this mainly targeted at consumer apps or enterprise use cases as well?

This is a massive unlock for indie devs! 🤯 The unpredictable cost of cloud API bills is always a blocker for building B2C AI apps. Moving inference on-device with just '3 lines of code' sounds like a dream for keeping margins healthy.

Congrats on the launch! I'm really curious about the trade-offs though—how significant is the impact on the app bundle size when integrating NexaSDK? Upvoted!