W&B Training by Weights & Biases

The fast and easy way to train AI agents with serverless RL

7 followers

The fast and easy way to train AI agents with serverless RL

7 followers

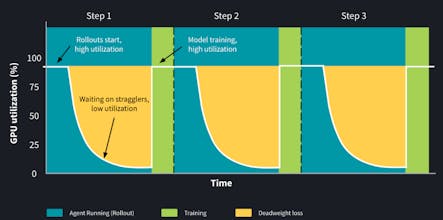

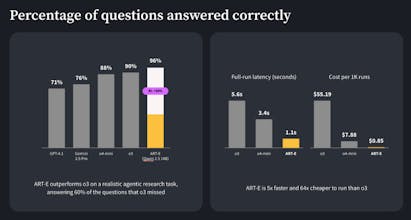

W&B Training offers serverless reinforcement learning for post-training large language models to improve their reliability performing multi-turn, agentic tasks while also increasing speed and reducing costs.

Payment Required

Launch Team

Framer — Launch websites with enterprise needs at startup speeds.

Launch websites with enterprise needs at startup speeds.

Promoted

Hunter

📌Report