Hey!

AI-Powered Pair Programming Friend! ✨

386 followers

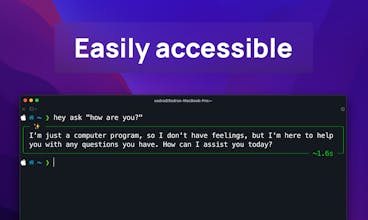

Hey is a free and open-source CLI tool for Linux, Mac, and Windows users that seamlessly integrates powerful Large Language Models (LLMs) for a delightful development experience. Hit it with your issues and bugs and let it shine with solutions! 💡

TTSynth.com

BlissBox

Free AI Image Extender

Startup Death Clock

Telebugs

Raycast
